©DK | All Rights Reserved

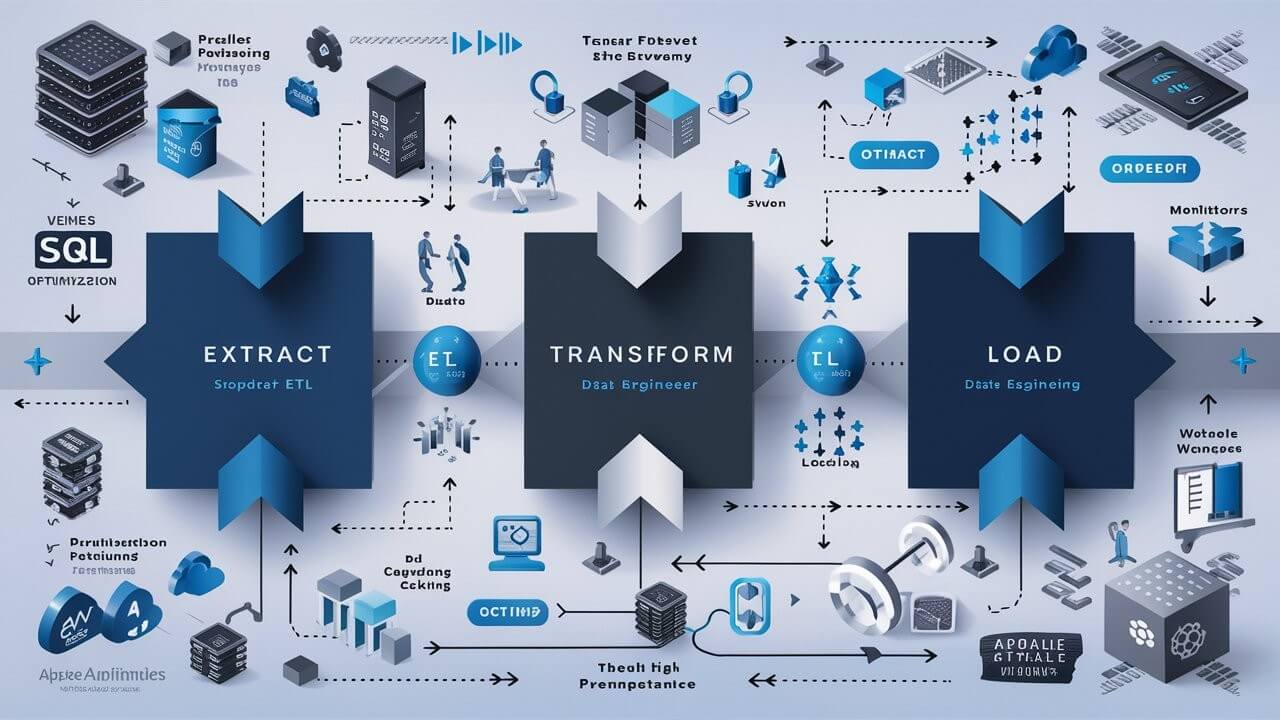

Optimising ETL (Extract, Transform, Load) pipelines for better performance is a crucial aspect of data engineering. A well-optimised pipeline runs smoothly and efficiently, which leads to faster data availability, reduced costs, and better decision-making capabilities.

Optimising ETL pipelines is an ongoing process. By applying the strategies below and iterating on them, you can keep your data pipelines running like well-oiled machines, delivering valuable insights faster and more efficiently.

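ETL stands for Extract, Transform, Load. It's a process that involves extracting data from source systems, transforming it into a clean, consistent format, and loading it into a target system such as a data warehouse.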

Optimising these steps is vital to ensure that your data is accurate, timely, and useful for analysis.

Just like a car, ETL pipelines can have weak points that slow everything down. Profiling tools can help pinpoint these bottlenecks, whether it's inefficient queries, excessive data movement, or resource limitations.
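
Before reaching for a full profiler, a quick way to see where the time goes is to time each stage separately. The sketch below assumes a hypothetical three-stage pipeline over in-memory data; the `timed` helper and the stage names are illustrative, not part of any particular tool.

```python
import time
from contextlib import contextmanager

stage_timings = {}  # stage name -> elapsed seconds


@contextmanager
def timed(stage):
    """Record how long the wrapped block takes under the given stage name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_timings[stage] = time.perf_counter() - start


# Hypothetical pipeline: each block stands in for a real ETL stage.
with timed("extract"):
    rows = [{"id": i, "amount": i * 1.5} for i in range(10_000)]

with timed("transform"):
    rows = [{**r, "amount_cents": int(r["amount"] * 100)} for r in rows]

with timed("load"):
    loaded = list(rows)  # stand-in for a write to the target store

# The stage with the largest elapsed time is the first candidate to optimise.
slowest = max(stage_timings, key=stage_timings.get)
print(f"slowest stage: {slowest} ({stage_timings[slowest]:.4f}s)")
```

Once the slowest stage is known, a dedicated profiler such as Python's built-in `cProfile` can narrow it down to individual functions.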

Imagine processing data in multiple lanes instead of just one! By leveraging parallel processing techniques, you can significantly reduce processing time for tasks that can be run concurrently.
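
As a minimal sketch of the "multiple lanes" idea, the example below extracts from several independent sources at the same time using a thread pool. The `extract_source` function and the source names are hypothetical, and the `sleep` call only simulates waiting on a network or database. Threads suit I/O-bound work like this; CPU-heavy transformations would usually need processes or a distributed engine instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def extract_source(name):
    """Hypothetical extractor: the sleep simulates waiting on a remote system."""
    time.sleep(0.1)
    return name, [f"{name}-row-{i}" for i in range(3)]


sources = ["orders", "customers", "products", "payments"]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() returns results in the same order as the inputs.
    results = dict(pool.map(extract_source, sources))
elapsed = time.perf_counter() - start

# Run one after another, the four extracts would take roughly 0.4s in total.
print(f"extracted {len(results)} sources in {elapsed:.2f}s")
```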

Think of a giant library – it would be a nightmare to search every book at once. Partitioning large tables into smaller, manageable chunks allows for faster querying and data manipulation.
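
To make the library analogy concrete, here is a small in-memory sketch of date-based partitioning. The event data and the `total_for_day` helper are made up for illustration; in a real warehouse the partitions would be table partitions or files, but the principle of reading only the relevant chunk is the same.

```python
from collections import defaultdict
from datetime import date

# Hypothetical event data.
events = [
    {"day": date(2024, 1, 1), "user": "a", "value": 10},
    {"day": date(2024, 1, 1), "user": "b", "value": 5},
    {"day": date(2024, 1, 2), "user": "a", "value": 7},
    {"day": date(2024, 1, 3), "user": "c", "value": 2},
]

# Split the data into one partition per day.
partitions = defaultdict(list)
for event in events:
    partitions[event["day"]].append(event)


def total_for_day(day):
    """Read only the matching partition instead of scanning every event."""
    return sum(e["value"] for e in partitions.get(day, []))


print(total_for_day(date(2024, 1, 1)))  # 15
```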

Well-written SQL queries are the secret sauce for efficient data transformation. Use indexes, filter rows as early as possible, select only the columns you need, and optimise join operations to squeeze the most performance out of your queries.
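
The sketch below shows these ideas on a small scale, using Python's built-in `sqlite3` module and an in-memory database. The `orders` table, its columns, and the index name are assumptions made for the example. It creates an index on the filter column, selects only two columns, and asks SQLite for its query plan to check that the index is actually used.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, status, amount) VALUES (?, ?, ?)",
    [(i % 100, "paid" if i % 2 else "open", float(i)) for i in range(1000)],
)

# Index the column used in the WHERE clause.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")

# Select only the required columns and filter in the database, not in Python.
query = "SELECT id, amount FROM orders WHERE customer_id = ? AND status = 'paid'"

# The last column of each EXPLAIN QUERY PLAN row describes the chosen strategy.
plan = conn.execute("EXPLAIN QUERY PLAN " + query, (7,)).fetchall()
plan_text = " ".join(row[-1] for row in plan)
print(plan_text)

rows = conn.execute(query, (7,)).fetchall()
print(len(rows), "matching rows")
```

Other databases expose similar tooling (usually some form of `EXPLAIN`), though the output format differs from engine to engine.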

Data that's accessed frequently can be cached, essentially creating a readily available copy for quicker retrieval. This can significantly improve performance, especially for repetitive tasks.
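
For lookups that repeat during a single run, Python's built-in `functools.lru_cache` is often enough. In this sketch `classify_prefix` is a hypothetical stand-in for a slow reference-data lookup; the cache statistics show how many calls were answered without redoing the work.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def classify_prefix(prefix):
    """Hypothetical slow lookup, e.g. a call to a reference-data service."""
    internal = {"10.0", "192.168"}
    return "internal" if prefix in internal else "external"


prefixes = ["10.0", "8.8", "10.0", "10.0", "8.8", "192.168"]
labels = [classify_prefix(p) for p in prefixes]

# Three distinct prefixes means three real lookups; the rest are cache hits.
info = classify_prefix.cache_info()
print(f"hits={info.hits} misses={info.misses}")
```

An in-process cache like this only lives for one run. Sharing cached data across runs or workers would need an external store, and any cache needs a plan for invalidating stale entries.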

Just like any complex system, ETL pipelines require constant monitoring. Utilise monitoring tools to track performance metrics and identify areas for further optimisation.

Instead of reloading all data every time, use incremental loads to update only the data that has changed. This reduces load times and resource usage.
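
One common way to implement incremental loads is a "high-water mark": remember the latest `updated_at` value you have loaded and, on the next run, pick up only rows newer than that. The sketch below assumes the source exposes a reliable `updated_at` column; the row layout and the `incremental_load` function are hypothetical.

```python
from datetime import datetime

# Hypothetical source table with a last-modified timestamp per row.
source_rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2024, 1, 2, 9, 0)},
    {"id": 3, "updated_at": datetime(2024, 1, 3, 9, 0)},
]


def incremental_load(rows, target, watermark):
    """Upsert rows changed after the watermark; return (new watermark, count)."""
    changed = [r for r in rows if r["updated_at"] > watermark]
    for row in changed:
        target[row["id"]] = row  # insert or overwrite by primary key
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return new_watermark, len(changed)


target = {}
watermark = datetime.min

# First run: everything is new, so all three rows are loaded.
watermark, n_first = incremental_load(source_rows, target, watermark)

# Row 2 changes in the source; the second run picks up only that row.
source_rows[1] = {"id": 2, "updated_at": datetime(2024, 1, 4, 9, 0)}
watermark, n_second = incremental_load(source_rows, target, watermark)

print(n_first, n_second)  # 3 1
```

Note that a timestamp watermark does not detect rows deleted at the source; that case usually needs change data capture or a periodic full reconciliation.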

Optimise your transformation logic by minimising complex operations and using efficient algorithms. Pre-compute aggregations when possible.
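
As a small illustration of pre-computing aggregations, the sketch below totals sales per region once during the transform step, so that later lookups are a dictionary read rather than a fresh scan of every row. The sales data and the `region_total` helper are invented for the example.

```python
from collections import defaultdict

# Hypothetical (region, amount) sales records.
sales = [
    ("north", 120.0),
    ("south", 80.0),
    ("north", 30.0),
    ("east", 50.0),
    ("south", 20.0),
]

# Aggregate once, in a single pass over the data.
running = defaultdict(float)
for region, amount in sales:
    running[region] += amount
totals_by_region = dict(running)


def region_total(region):
    """Constant-time lookup against the pre-computed totals."""
    return totals_by_region.get(region, 0.0)


print(region_total("north"))  # 150.0
```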

Monitor and manage the resources (CPU, memory, I/O) used by your ETL processes. Ensure that they are well-tuned and not overloading your systems.
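
One simple way to keep memory under control is to process data in fixed-size batches rather than loading everything at once. The sketch below assumes a hypothetical source that yields rows lazily; the batch size of 1,000 is an arbitrary choice that you would tune for your own workload.

```python
from itertools import islice


def batches(iterable, size):
    """Yield lists of at most `size` items without materialising the full input."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def source_rows():
    """Hypothetical source that produces rows one at a time."""
    for i in range(10_500):
        yield {"id": i, "value": i % 7}


running_total = 0
batch_sizes = []
for batch in batches(source_rows(), 1000):
    # Only one batch is held in memory at any point.
    running_total += sum(row["value"] for row in batch)
    batch_sizes.append(len(batch))

print(len(batch_sizes), "batches, last batch size", batch_sizes[-1])
```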

The landscape of ETL tools is constantly evolving. Consider exploring recent frameworks like Airbyte, dbt, or Stitch that offer features like modular design, cloud-native architecture, and visual interfaces, all of which can contribute to streamlined pipeline development and improved performance.

Use orchestration tools like Apache Airflow, Prefect, or Dagster to schedule and manage your ETL tasks efficiently.
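
At their core, these tools model a pipeline as a graph of tasks and dependencies, and run each task only once everything it depends on has finished. The sketch below is not the API of Airflow, Prefect, or Dagster; it uses Python's standard-library `graphlib` (Python 3.9+) purely to illustrate dependency ordering, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_join"},
}

# static_order() yields the tasks in an order that respects every dependency.
run_order = list(TopologicalSorter(dag).static_order())
print(run_order)
```

A real orchestrator adds what this sketch leaves out: scheduling, retries, parallel execution of independent tasks, and alerting when something fails.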

Implement monitoring and logging to track the performance of your ETL pipelines. Tools like Prometheus, Grafana, and the ELK Stack can help you identify and address bottlenecks.
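
Whichever monitoring stack you choose, it needs something to collect. A minimal starting point, sketched here with Python's built-in `logging` module, is a decorator that logs row counts and duration for each step. The `logged_step` decorator and the `drop_nulls` step are hypothetical names used only for this example.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")


def logged_step(func):
    """Log rows in, rows out, and duration for a step that maps a list to a list."""
    @wraps(func)
    def wrapper(rows):
        start = time.perf_counter()
        try:
            result = func(rows)
        except Exception:
            log.exception("step=%s failed", func.__name__)
            raise
        log.info(
            "step=%s rows_in=%d rows_out=%d seconds=%.4f",
            func.__name__, len(rows), len(result), time.perf_counter() - start,
        )
        return result
    return wrapper


@logged_step
def drop_nulls(rows):
    # Hypothetical cleaning step: discard rows with a missing amount.
    return [r for r in rows if r.get("amount") is not None]


cleaned = drop_nulls([{"amount": 1}, {"amount": None}, {"amount": 3}])
```

Consistent `key=value` log lines like these are straightforward to parse and chart once they are shipped to a log aggregation tool.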

By implementing these optimisation strategies, you can transform your data pipelines from sluggish beasts into high-performance engines. Efficient ETL processes not only save time and resources but also ensure that your organisation has access to accurate, timely data for making critical business decisions.

Remember that optimisation is not a one-time task but an ongoing process. Regularly revisiting and refining your ETL pipelines will help you stay ahead in the ever-evolving landscape of data engineering.
